Hey all,

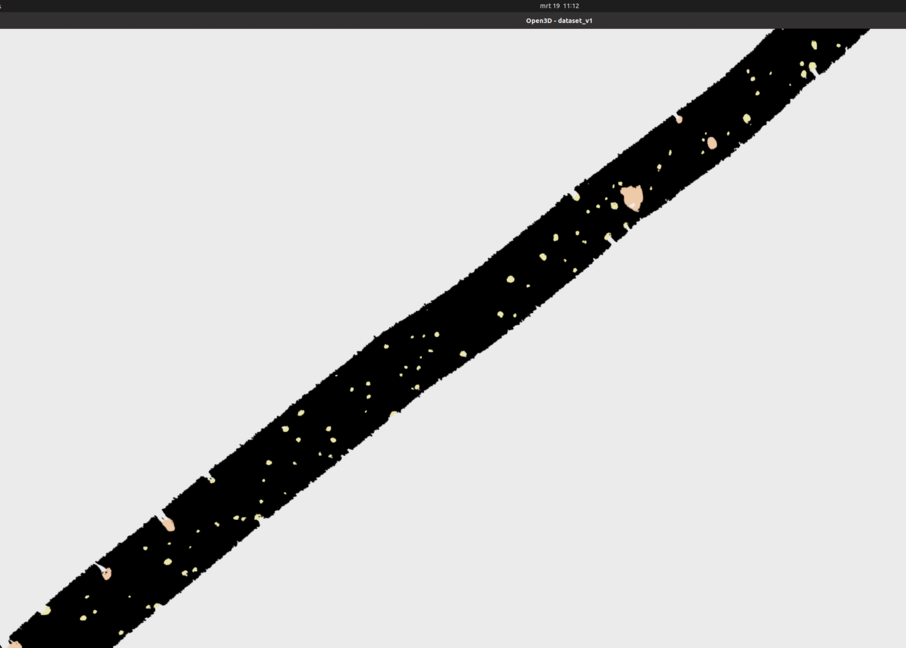

I am quite new to machine learning and was trying to train a model to find objects on a seafloor and ran into peculiar behavior in the learning process. I will go through the steps i did in order to explain it thoroughly in the hope that someone spots where i went wrong in this process.

Firstly I have labelled my dataset according to 3 classes: Seafloor, Big boulders, Small boulders. Each was given a class according to the format of the Custom3D dataloader (x, y, z, class (a 0, 1, or 2)), so I used the Custom3D dataloader.

The visualizer was able to visualize it and show the map of the classes:

The next step was to set up the pipeline (the class weights are the number of occurrences of the corresponding class, is this correct?), this was done with the config file:

dataset:

name: dataset_v1

dataset_path: # path/to/your/dataset

cache_dir: ./logs_randla/cache

test_dir: ./test

test_result_folder: ./testresult_randla

train_dir: ./train

val_dir: ./val

class_weights: [9716789, 688821, 254430]

num_points: 45056

use_cache: true

steps_per_epoch_train: 100

steps_per_epoch_valid: 10

sampler:

name: SemSegSpatiallyRegularSampler

model:

name: RandLANet

batcher: DefaultBatcher

ckpt_path: # path/to/your/checkpoint

dim_feature: 1

dim_input: 3

dim_output:

-16

-64

-128

-256

-512

grid_size: 0.08

ignored_label_inds: []

k_n: 16

num_classes: 3

num_layers: 5

num_points: 45056

sub_sampling_ratio:

-4

-4

-4

-4

-2

t_align: true

t_normalize:

recentering: [0, 1]

t_augment:

turn_on: false

rotation_method: vertical

scale_anisotropic: false

symmetries: true

noise_level: 0.01

min_s: 0.6

max_s: 1.2

pipeline:

name: SemanticSegmentation

adam_lr: 0.001

learning_rate: 0.001

batch_size: 2

main_log_dir: ./logs

max_epoch: 10

save_ckpt_freq: 5

weight_decay: 0.5

scheduler_gamma: 0.9886

momentum: 0.98

test_batch_size: 1

train_sum_dir: train_log

val_batch_size: 1

This, however, resulted in the loss and accuracy constantly jumping around in training:

=== EPOCH 1/200 ===

loss train: 1.108 eval: 1.101

mean acc train: 0.356 eval: 0.167

mean iou train: 0.190 eval: 0.031

total iou train: 0.192 eval: 0.031

total acc train: 0.092 eval: 0.092

=== EPOCH 2/200 ===

loss train: 1.103 eval: 1.123

mean acc train: 0.360 eval: 0.267

mean iou train: 0.189 eval: 0.078

total iou train: 0.191 eval: 0.080

total acc train: 0.221 eval: 0.221

=== EPOCH 3/200 ===

loss train: 1.109 eval: 1.080

mean acc train: 0.368 eval: 0.344

mean iou train: 0.190 eval: 0.198

total iou train: 0.192 eval: 0.198

total acc train: 0.521 eval: 0.521

=== EPOCH 4/200 ===

loss train: 1.088 eval: 1.057

mean acc train: 0.354 eval: 0.356

mean iou train: 0.184 eval: 0.227

total iou train: 0.186 eval: 0.227

total acc train: 0.639 eval: 0.639

=== EPOCH 5/200 ===

loss train: 1.116 eval: 1.090

mean acc train: 0.360 eval: 0.304

mean iou train: 0.188 eval: 0.219

total iou train: 0.190 eval: 0.221

total acc train: 0.601 eval: 0.601

Epoch 5: save ckpt to ./logs/RandLANet_dataset_v1_torch/checkpoint

=== EPOCH 6/200 ===

loss train: 1.114 eval: 1.165

mean acc train: 0.331 eval: 0.237

mean iou train: 0.189 eval: 0.163

total iou train: 0.193 eval: 0.162

total acc train: 0.461 eval: 0.461

=== EPOCH 7/200 ===

loss train: 1.101 eval: 1.170

mean acc train: 0.351 eval: 0.288

mean iou train: 0.187 eval: 0.159

total iou train: 0.189 eval: 0.151

total acc train: 0.410 eval: 0.410

=== EPOCH 8/200 ===

loss train: 1.119 eval: 1.197

mean acc train: 0.329 eval: 0.318

mean iou train: 0.188 eval: 0.149

total iou train: 0.190 eval: 0.147

total acc train: 0.394 eval: 0.394

=== EPOCH 9/200 ===

loss train: 1.105 eval: 1.165

mean acc train: 0.348 eval: 0.274

mean iou train: 0.190 eval: 0.163

total iou train: 0.192 eval: 0.164

total acc train: 0.420 eval: 0.420

=== EPOCH 10/200 ===

loss train: 1.113 eval: 1.201

mean acc train: 0.332 eval: 0.261

mean iou train: 0.188 eval: 0.148

total iou train: 0.190 eval: 0.144

total acc train: 0.401 eval: 0.401

Epoch 10: save ckpt to ./logs/RandLANet_dataset_v1_torch/checkpoint

This would go on forever, with the loss staying roughly the same and accuracy too, as visible in the first 10 epochs. This would also be the same during training of the model using the KPCNN method.

I have tried tweaking the settings but to no avail, am I doing something wrong and what could be the issue?

I hope someone would see where i went wrong, thanks in advance for looking into it!

Note: I can’t increase the batch size any further as that exceeds my video card memory.